Storing JSON in MySQL is... fine?

Hi. This is mostly a test page for me to test my mdx components and stuff. Although you can still read it. There's no real major breakthrough, it's like a high school projet report.

Introduction

Let's create an imaginary problem. There are no array types in SQL. Suppose we want to store a set of countries.

I have defined a huge list of countries here, it looks like this:

type Country int64

// Taken from https://en.wikipedia.org/wiki/List_of_ISO_3166_country_codes

const (

COUNTRY_AF Country = iota // Afghanistan

COUNTRY_AX // Åland Islands

...

COUNTRY_ZW // Zimbabwe

)

Now there's three solutions I can see:

- Just store it in JSON. JSON gets a lot of hate for being bulky, and there are many encoding schemes like MessagePack whose whole point is to be JSON but smaller. In our case you can even shave off 2 bytes by removing the surrounding braces. You could also opt out of the commas in some cases 1.

-

The best solution I can think of is to store them as a bitset. A bitset is just like, you know, if you can use just a bit, use that instead of a whole machine word.

-

There is a final solution for the real SQL nerds who want to adhere to some kind of religious purity. What they will propose is we create another table that stores the "set of" relationship. For example, if user ID 100 has a set of ['a', 'b'], they want another table called maybe

elements:ID Element 1 a 2 b 3 c And then

user_to_elements:ID UserID ElementID 1 100 1 1 100 2

More details

JSON

So our DB schema will look like this:

CREATE TABLE countries (

id bigint(20) unsigned NOT NULL AUTO_INCREMENT,

countries JSON,

PRIMARY KEY (id)

)

What's more to be said? You just mashal the array into a string and insert it.

Bitset

The schema is similar, but we can reduce it's size by using just BINARY(32).

Since we have around 250 countries, we only need four 64-bit elements to

represent the whole set.

To go to and fro from our set of countries to our bitset, we define these two functions.

type CountryBitset [4]uint64

type Countries []Country

func (c *Countries) ToBitset() CountryBitset {

var e CountryBitset

for _, country := range *c {

arrIndex := int(country / 64)

bitIndex := int(country % 64)

mask := uint64(1) << bitIndex

e[arrIndex] = e[arrIndex] | mask

}

return e

}

func (c *CountryBitset) ToCountries() Countries {

countries := Countries{}

for i, elem := range c {

bitIndex := 0

for elem != 0 {

if elem&1 == 1 {

country := Country(bitIndex + i*64)

countries = append(countries, country)

}

bitIndex++

elem = elem >> 1

}

}

return countries

}

To go to and fro from MySQL, we implement the golang database/sql/driver

Scanner and Valuer interfaces:

func (c *Countries) Value() (driver.Value, error) {

b := make([]byte, 32)

bitset := c.ToBitset()

binary.BigEndian.PutUint64(b[0:8], bitset[0])

binary.BigEndian.PutUint64(b[8:16], bitset[1])

binary.BigEndian.PutUint64(b[16:24], bitset[2])

binary.BigEndian.PutUint64(b[24:32], bitset[3])

return b, nil

}

func (e *Countries) Scan(src any) error {

var bitset CountryBitset

switch val := src.(type) {

case []byte:

bitset[0] = binary.BigEndian.Uint64(val[0:8])

bitset[1] = binary.BigEndian.Uint64(val[8:16])

bitset[2] = binary.BigEndian.Uint64(val[16:24])

bitset[3] = binary.BigEndian.Uint64(val[24:32])

default:

return fmt.Errorf("Unknown type of src: %v", src)

}

*e = bitset.ToCountries()

return nil

}

I think there's not much to explain here. I even wrote tests so you know it works. It even has this "fuzzing" thing which what all the other kids are doing.

Joins

Ok now this is going to be really damn verbose.

CREATE TABLE IF NOT EXISTS users (

id bigint(20) unsigned NOT NULL AUTO_INCREMENT,

PRIMARY KEY (id)

) AUTO_INCREMENT=0 ENGINE=InnoDB;

CREATE TABLE IF NOT EXISTS users_to_countries (

id bigint(20) unsigned NOT NULL AUTO_INCREMENT,

countryid bigint unsigned NOT NULL,

userid bigint unsigned NOT NULL,

PRIMARY KEY (id)

) AUTO_INCREMENT=0 ENGINE=InnoDB;

For writing, you insert the user, and then you can do a batch insert for all the

countries the user has into users_to_countries.

For reading you would also have to perform at least two queries, one to get the user and another to get its countries.

I'm sure you could do some kind of join to make it into one statement. You can use indexing to speed up the reads because it has to scan the whole table otherwise. In any case, you can speed it up, but it's plain stupid from both an engineering perspective and a performance perspective. I'm not gonna boggle my mind with tweaking my DB and SQL statements when I can JUST SKIP IT by using a simpler data format.

Ok from now on I'm not even going to mention this anymore. It's hard to write, and it's also really bad in performance. Preliminary tests on insert speed show it to be at least 3-4 times slower than the other two solutions. The code is in the repo if you want to try it out.

Why JSON should lose

JSON has a lot of overhead and there's no way it's gonna win. First of all, the JSON is bloated. Look at our implementation. The bitset is just four integers. JSON is a string. It can be a lot more than the size of four integers.

Also, JSON needs reflection to be marshalled and unmarshalled. You don't need to do that for four integers man.

Here's another thing. If you're storing JSON in SQL you're most likely going to use something like TEXT 2. In MySQL, the JSON type is actually an alias for LONGTEXT3, which is a bit heavier. The different TEXT objects take anywhere from 2 to 4 bytes of extra overhead per element, probably for some pointers and metadata the engine uses to address the string.

That's all quite trivial, but what's more damning is this statement: "For BLOB and TEXT data, the information is stored internally in a different area of memory than the row buffer." 4 The row buffer is essentially a cache for the DB (much like filesystem buffers) used during reading and writing. And it makes sense that arbitrarily long strings don't get stored into such caches. So this means that such requests should become a lot slower.

Benchmarks and results

Alright so all benchmark code can be found here. It even draws the graph for you after it's done so you can verify my results easily if you want.

Serial RW speed

The benchmark is simple.

I have a bunch of test cases which go from 1000 to 20_000.

For each test case, I just generate n random sets of countries and write them

one by one to the DB.

Here are the results:

So it seems that bitsets are a little over 10% faster than JSON.

Running a quick CPU profile, I can see that for the JSON implementation, the

program spends 42.5% of the time in runtime.schedule compared to 49.5% of the

time for the bitset implementation.

This means the program is spending half of its time idling.

Parallel RW Speed

In this test, I have made the writes parallel.

So now the test dataset is split into n slices for n goroutines to write.

Each goroutine still writes in a serial fashion though, one insert at a time.

I'm running this on a M1 Mac with 8+2 cores. Here's the timing info for different number of workers:

Conclusions

To be honest I would say that a 10% performance gain by moving from JSON to bitsets is pretty good. But I'm sure this scales with the size of the inputs and is not fixed at 10%. We have a huge enum with 250+ elements. A smaller enum would also mean smaller sets.

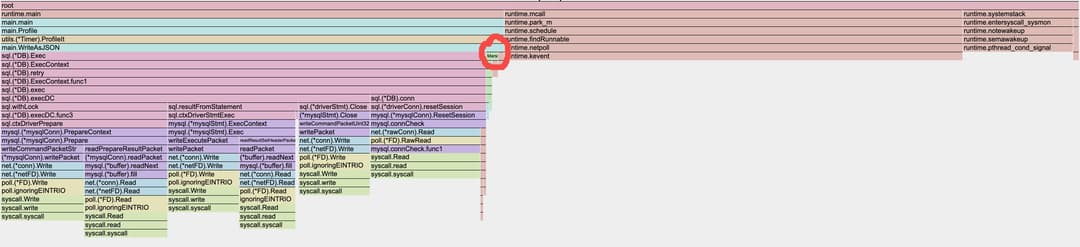

The main bottleneck here is in fact writing to the database. Check out the screenshot from the profiler flamegraph. MarshalJSON is absolutely not the problem here. Most of the time is spent on syscalls.

MarshalJSON circled in red.

Footnotes

-

This is called prefix coding, see for example https://en.wikipedia.org/wiki/Prefix_code ↩

-

Yes in our case we can actually use

VARCHARsince our string will never exceed ~1k characters. But I don't think it's gonna make that much of a difference. ↩ -

https://dev.mysql.com/doc/refman/8.4/en/storage-requirements.html ↩